Another case of title = TL;DR, because I haven’t posted an update in about a month now. I won’t lie, at this point there’s just so much that I can’t cover all the intricacies, but I’ll cover the big stuff that I’ve been working on.

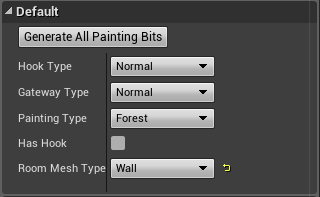

First and foremost, Save/Load has been my child for the last 3 weeks, and I’ve been repeating the same thing since I started it: “Saving and loading is criminally underrated in games.” It is so much more complex than it seems. It seems like it would be as simple as saving the entire objects you want, which would save all of their variables – and that might be true for engines other than Unreal. But in Unreal, you need to save the variables of objects, which means you need references to those objects, and references, as it turns out, can go awry very easily and very fast, especially when the entire purpose of those references is to change (gateways on hooks do not stay on the same hook all game). Long story short, it’s been a hassle, and I’m still bugfixing it even now, into week 4. Luckily it’s mostly done; only minor kinks remain.
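To make the reference problem a bit more concrete, here is a rough sketch in plain C++ (no engine code) of one common way around it: save a stable ID for whatever a reference points at, then look that ID up again on load. This is not our actual implementation. `Hook`, `Gateway`, `GatewayRecord`, `SaveGateways`, and `LoadGateways` are all made-up names for illustration, and it assumes every hook and gateway has a unique string ID.

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-ins for illustration only; these are not Unreal types.
struct Hook {
    std::string id;      // stable name that survives a reload
};

struct Gateway {
    std::string id;      // stable name that survives a reload
    const Hook* hook;    // live reference; changes as the gateway moves
};

// What gets written to disk: plain IDs, no pointers.
struct GatewayRecord {
    std::string gatewayId;
    std::string hookId;  // empty when the gateway is not on a hook
};

// Turn each live reference into the ID of the hook it points at.
std::vector<GatewayRecord> SaveGateways(const std::vector<Gateway>& gateways) {
    std::vector<GatewayRecord> records;
    for (const Gateway& g : gateways) {
        records.push_back({g.id, g.hook ? g.hook->id : std::string()});
    }
    return records;
}

// Re-link each gateway to a live hook by looking up the saved ID.
// Unknown or empty IDs leave the gateway unhooked instead of dangling.
void LoadGateways(const std::vector<GatewayRecord>& records,
                  const std::map<std::string, const Hook*>& hooksById,
                  std::vector<Gateway>& gateways) {
    for (Gateway& g : gateways) {
        for (const GatewayRecord& r : records) {
            if (r.gatewayId != g.id) continue;
            auto it = hooksById.find(r.hookId);
            g.hook = (it != hooksById.end()) ? it->second : nullptr;
        }
    }
}
```

The point of the sketch is that the save data never holds a pointer, so it does not matter that the hook objects are recreated at different addresses after a reload, or that a gateway moved to a different hook five minutes before saving.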

And uh, wow. I’m at this moment looking over my tasks for the past few weeks and it’s mostly just been saving and loading. It has consumed that much of my time.

Most of the other things I did were bug fixes to previous systems. The ladders now glide a tad smoother, the menus have been slowly updated to support saving and loading, and the foyer (Have I mentioned we have a foyer? It’s this central hub area of the game that is Tucker’s pièce de résistance – it’s gorgeous) now has narrative playback so you can revisit all the audio you might’ve forgotten.

The big things are finally settling into place this coming week. We’re looking at massive optimization changes; options for graphics, FOV, and other player/hardware goodies; and putting together a trailer for our game (which isn’t my doing, but I will be doing a bit of the dialogue).

In short, a lot has changed in the month since I last posted, and it’s the culmination of all 8 of us pouring ourselves into this. It’s really coming together, and I’m excited.

As always, until next time.